So, you’ve heard about Cannoli, the high-performance tracing engine, but don’t know where to start. Perhaps you read the source code but don’t understand how to implement your analysis. Or maybe you’re someone who learns by example and finds inspiration in detailed walkthroughs. If so, this is the post for you. The following walkthrough implements a program backtrace and demonstrates how to harness Cannoli to serve your instrumentation needs.

Note: this blog post is not a replacement for, but rather a supplement to, the Cannoli GitHub readme quick start guide and Brandon Falk’s previous blog post detailing Cannoli internals and demonstrating performance considerations.

This post can be thought of as a detailed walkthrough of the “What to Do” section in the GitHub readme.

General Considerations

This section includes general considerations worth reviewing before starting any Cannoli project. If you are interested in the backtrace walkthrough, skip ahead to the Implementing a Backtrace section.

Project Configuration

The project’s Cargo.toml file configures the project, specifically the analysis process (client) and Jitter shared library (server). This blog uses the following template, where <project> is the project name, and therefore the name of the root project folder.

[package]

name = "<project>"

version = "0.1.0"

edition = "2021"

[dependencies]

cannoli = { path = "../cannoli/cannoli" }

jitter = { path = "../cannoli/jitter" }

[lib]

crate-type = ["cdylib"]

[[bin]]

name = "<project>"

path = "src/main.rs"

This structure assumes that the compiled Cannoli project (cloned and configured using the Cannoli GitHub repo) also exists in the parent directory; thus the ../cannoli/cannoli path resolves to the Cannoli source. Finally, the example defines the client in src/main.rs and the server (Jitter) in src/lib.rs. The rough directory structure follows, with notes in parentheses.

Note that the Cannoli GitHub repo contains a backtrace example as a member of the project, so the directory structure and Cargo.toml file differ slightly.

|-- <project>

| |-- .cargo

| | `-- config.toml (**needed to compile, see below**)

| |-- examples

| | `-- testcase

| |-- Cargo.toml

| |-- src

| | |-- main.rs (client source)

| | `-- lib.rs (server source)

| `-- target (after building the project)

| |-- release

| | |--<project> (compiled client)

| | |-- lib<project>.so (compiled server)

| | `-- ...

| `-- ...

`-- Cannoli (clone)

|-- cannoli

| |-- Cargo.toml

| |-- src

| `-- ...

`-- jitter

|-- Cargo.toml

|-- src

`-- ...

Note: compiling a new project requires the -asm-macro-max-nesting-depth flag in .cargo/config.toml, as shown below.

[target.x86_64-unknown-linux-gnu]

rustflags = ["-Cllvm-args=-asm-macro-max-nesting-depth=1000"]

Failure to include this file will result in the following error: error: macros cannot be nested more than 20 levels deep.

Creating a Client

This is the most substantial programming task and varies considerably depending on the instrumentation’s objective. That said, there are still important general considerations for the design of every client.

First, it is worthwhile to identify what process-wide information and static, pre-execution data the client requires. Process-wide information that changes during execution should be handled within the PidContext and should only be modified by the trace callback. The reason is that this PidContext can contain program state information and therefore should be handled in the trace callback. trace is called sequentially, that is, in the order of execution. Other callbacks such as read, write,regs, etc are executed asynchronously by the client so PidContext should be treated as immutable.

Pre-execution information or data that does not change during execution, such as static symbols, can be stored at the TidContext level for each thread. This information does not depend on state, and thus can be managed at the thread level for quicker access during asynchronous callbacks without lock contention.

Defining Server Hooks

It is important to consider the minimum information needed to achieve the objective when defining Cannoli JIT hooks, because including more information than is necessary results in wasted overhead. Cannoli offers two types of hooks:

fn hook_inst(_pc: u64, branch: bool) -> HookType {

HookType::Register

}

hook_inst: an instruction hook, called before an instruction is lifted in QEMU- Five options:

- Always - hook on every instruction and process using the

execcallback - Once - self-silencing, useful for coverage analysis. The instruction is ignored after the first hook (unless re-JITted, though that is an infrequent occurrence)

- Register - returns register information to the

regscallback - Branch - contains the same information as a

Registercallback and also includes a boolean indicating if the current instruction intends to branch - Never - never hook

- Always - hook on every instruction and process using the

- Five options:

fn hook_mem(_pc: u64, _write: bool, _size: usize) -> bool {

false

}

hook_mem: a memory hook, called during a memory access (read or write)- Two options:

- true - hook memory accesses

- false - never hook

- Includes

writeargument, which istruefor memory writes, that can be used to filter read / write operations sent to the analysis client

- Two options:

Choosing the appropriate option depends on the analysis’s goal. It is important to determine the minimum hooks necessary, as filtering at this stage speeds up both emulation and analysis.

Running the Client

The Cannoli client exposes a socket on 127.0.0.1:11458. Client code exists in <project>/src/main.rs, so running the client is typically as simple as cargo +nightly run --release.

Running the Emulated Process

Launching the process first requires following the the Cannoli GitHub readme to build Cannoli and then compile QEMU with Cannoli. Cannoli Jitter (server) source code typically exists in <project>/src/lib.rs, so cargo +nightly build --release compiles the shared library to <project>/target/release/lib<project>.so. Next, run the binary within QEMU using:

<path to QEMU with Cannoli>/build/qemu-<architecture> -cannoli <project>/target/release/lib<project>.so ./<binary>

It is important to ensure:

- The QEMU binary of choice is in a QEMU repository configured with Cannoli

- The QEMU architecture matches the binary’s architecture

Implementing a Backtrace

The goal for this use case is to implement a working backtrace that logs a program’s function call stack at every function entry and exit. This example targets a MIPS binary for simplicity; x86 binaries write the return instruction address to the stack rather than storing it in a link register, thereby complicating analysis. ARM binaries could be instrumented in a similar manner to this example.

Defining Hooks

It is important to break down the tracing requirements for this use case, as these define what callbacks the client implements. Identifying function calls and returns requires hooking instructions using hook_inst because analysis needs to assess pc to see if it enters or exits a function. HookType::Once and HookType::Always do not contain register information, so they do not suffice. HookType::Register is a tempting choice, but HookType::Branch contains additional information - a branching flag - which is vital for this analysis.

hook_inst hooks an instruction before it is executed by QEMU, and thus occurs on the branching instruction that jumps to the function start or return. This leads to some valuable insights:

- The

HookType::Branch’sbranchflag istruewhenpccontains the address of the jumping instruction, not whenpcis the first instruction in the function nor whenpchas returned to the return address - The link register is set after the branch

- Similarly,

pcis set to the return address when leaving a function after thebranchinstruction hook - Therefore,

branchistrueprior to every call and return, and for every branching instruction that jumps to another basic block within a function. Function calls and returns will be in the minority ofbranchs

These realizations dictate some of the analysis, and thereby how to structure hooks. The client should track function entries and exits using the following process:

- Hook every instruction and set a

PidContextflag if thebranchflag is true. This indicates that the next instruction should be evaluated as an entry or exit - For every instruction, check if the global flag is set. If so, check the current instruction for an entry or exit condition

- Entries are when the

pcvalue contains a function’s start address - Exits are when the

pcvalue matches the expected return address

- Entries are when the

- Maintain a stack of return address pointers to do the above comparison for exit conditions

- Push entries to the stack when entering a function

- Pop entries from the stack when exiting a function

- If the global flag is not set and the current instruction is not a branch, return immediately

The client must next evaluate the hook_mem condition. Thankfully, this is a much easier decision. The above requirements completely define how to log functions calls and returns, so no additional memory read/write hooks are needed. Note: write hooks are required for x86 programs since the call instruction increments and pushes the return address to the stack, and therefore cannot be retrieved from registers.

These decisions result in the following src/lib.rs file:

use jitter::HookType;

#[no_mangle]

fn hook_inst(_pc: u64, branch: bool) -> HookType {

HookType::Branch

}

#[no_mangle]

fn hook_mem(_pc: u64, _write: bool, _size: usize) -> bool {

false

}

Defining Analysis

The previous section defined the high-level analysis, but implementation requires attention to a number of details. This starts by defining the PidContext and TidContext structures. The Creating a Client section outlines some specifics that are important to consider when populating these contexts. Again, TidContext is a context populated at the initialization of each thread, and is held by an individual thread. This is most useful for process information that does not change during execution. Conversely, PidContext maintains process-wide information and is only mutable in the sequential trace callback; manipulating in any of the asynchronous callbacks would jeopardize correctness in state.

Defining PidContext, TidContext

Armed with this knowledge, information can be categorized into the appropriate structures:

- Symbols, to match

pcwith a function start address →TidContext- Rationale: does not change during the process, threads can hold individually

- Branch flag, to know whether to investigate the next instruction →

PidContext- Rationale: this must be maintained at the process level because it is a process-wide flag

- Backtrace, holding the stack of function calls →

PidContext- Rationale: extends across analysis threads and is a process-level structure

- Return address stack, holding a stack of return addresses to identify function exits →

PidContext- Rationale: same as backtrace above

This analysis results in the following PidContext level structs:

pub type Backtrace = Vec<(&'static str, u64)>;

pub type ReturnStack = Vec<u64>;

pub struct BacktraceState {

pub backtrace: Backtrace,

pub return_stack: ReturnStack,

pub branch_flag: bool,

}

impl Default for BacktraceState {

fn default() -> Self {

Self {

backtrace: Vec::new(),

return_stack: Vec::new(),

branch_flag: false,

}

}

}

pub struct PidBacktraceCtx(pub Mutex<BacktraceState>);

Note that the PidBacktraceCtx’s BacktraceState parameter is wrapped in a Mutex to ensure accesses are done sequentially.

The TidContext is simpler, and just maintains a list of binary symbols and addresses to determine function entries and backtrace symbol names.

pub struct TidContext {

// Lookup from an address to a symbol, stored in sorted order

pub symbols: Vec<(u64, &'static str)>,

}

Both these structs and their implementations are stored in a separate module, src/utils/backtrace.rs, since they are specific to the backtrace analysis.

Implementing Cannoli

The next step is to define the Cannoli struct, BacktraceExample, and define its Cannoli trait, init_pid, and init_tid methods. init_pid creates a new BacktraceState (empty vectors and false flag), while init_tid loads a symbol file symbols.txt (generated from the nm unix command) to get a vector of tuples: (start address, symbol name).

struct BacktraceExample;

impl Cannoli for BacktraceExample {

type Trace = Trace; // more on this in a minute

type TidContext = TidContext;

type PidContext = PidBacktraceCtx;

fn init_pid(c: &cannoli::ClientInfo) -> Arc<Self::PidContext> {

Arc::new(

PidBacktraceCtx(Mutex::new(BacktraceState::default()))

)

}

/// Load the symbol table

fn init_tid(_pid: &Self::PidContext,

_ci: &cannoli::ClientInfo) -> (Self, Self::TidContext) {

// Symbols

let mut symbols = Vec::new();

// Load the symbol file up and leak it so all the lifetimes are static

let data = std::fs::read_to_string("symbols.txt").unwrap();

<snip>

// Sort the symbols by address

symbols.sort_by_key(|x| x.0);

(Self, TidContext {

symbols,

})

}

Now that the BacktraceExample struct has a defined PidContext and TidContext, the next step is handling callbacks and implementing the analysis logic.

Handling Callbacks

As discussed previously, the jitter generates a single hook type, HookType::Branch, which calls the branch callback. This callback is fairly simple, it extracts the return address, ra ($31 in MIPS), from the register state and resolves pc into a symbol using the TidContext. This function cannot set the global PidContext branch_flag boolean; any synchronous operations that depend on program state, such as setting or checking the flag, must be performed in Cannoli's trace. Therefore, the function ends by adding an event to the sequential trace for further processing.

In order to use the trace, a type is required in PidContext (this was glossed over previously). There is only one trace event type required for this analysis, Trace::Branch. Its member variables include the branch flag, the return address, and the symbolized pc.

enum Trace {

Branch {

branch: bool,

pc: SymOff,

ra: u64,

},

}

This Trace type is included when implementing Cannoli for BacktraceExample, as shown earlier. The final action in the branch callback is to push this trace event for linear processing.

No additional jitter callbacks are required, as this analysis does not capture memory hooks with hook_mem.

Parsing the Trace

Finally, BacktraceExample must process the sequential trace of events in the trace callback.

The first action is simple: check if the PidContext branch flag is already set. If not, no further analysis is required as the current instruction is neither the beginning of a basic block nor the return from a function. In this case, skip over all additional analysis and inspect the current event’s branch boolean, updating the global branch_flag if required.

If the prior event in the trace was a branch, further analysis is required to determine if the current instruction is the entry or exit of a function, or simply a jump to a different basic block within a function. First, it checks if pc matches a function entry by comparing the symbol offset using SymOff’s is_entry() member function. If so, the program updates the backtrace by pushing the current symbol and the return address to corresponding stacks. Finally, it logs the event.

The client next checks whether the current address matches the top of the return stack using PidBacktraceContext’s is_return() method. If so, it pops from both the backtrace and return address stacks and logs the event. Note, this must be done in a loop since MIPS implements b instructions that branch without linking, so the return address might be the same for sequential functions in the call stack.

The final product is highlighted in the source code, here.

Create Cannoli

The last (and easiest) step is to create the Cannoli struct and run the analysis with whatever number of threads is desired. This example uses four, though this should be tuned according for each analysis and hardware.

fn main() {

create_cannoli::<BacktraceExample>(4).unwrap();

}

Launch the Analysis

With the analysis client defined, launch the client application from the project directory using:

cargo +nightly run --release

Or by running the binary directly:

./target/release/backtrace

It should compile and wait, as the client listens for a Cannoli server emulating the target binary to connect.

Note: don’t forget the .cargo/config.toml file in the project directory as mentioned in the Project Configuration section above!

Emulate and Hook

The final step, if all else went well, is to spin up the target binary in the Cannoli-instrumented QEMU:

<path to QEMU with Cannoli>/build/qemu-mipsel -cannoli ``pwd``/target/release/libbacktrace.so ./backtrace_test_mips

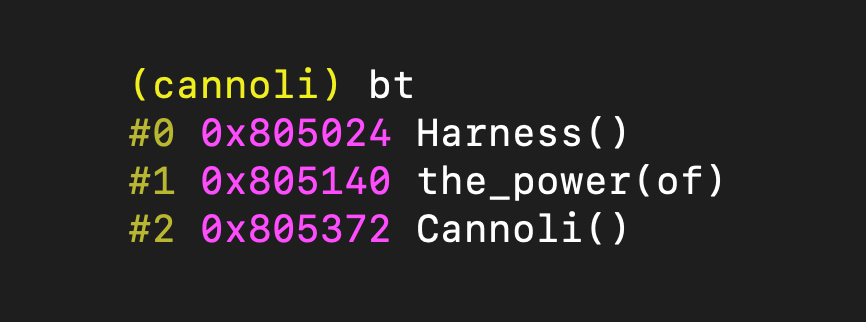

The server and client connect, and the analysis client dumps a new backtrace event at every function entry and exit!

<snip>

{

"backtrace": [

[

"__libc_start_main",

4196956

],

[

"__libc_start_call_main",

4196732

],

[

"main",

4196452

]

]

},

{

"backtrace": [

[

"__libc_start_main",

4196956

],

[

"__libc_start_call_main",

4196732

],

[

"main",

4196452

],

[

"function_one",

4196356

]

]

},

{

"backtrace": [

[

"__libc_start_main",

4196956

],

[

"__libc_start_call_main",

4196732

],

[

"main",

4196452

],

[

"function_one",

4196356

],

[

"puts",

4230288

]

]

},

<snip>