In 2019, Sophia d’Antoine and I gave a presentation at SummerCon in which we explored threats relating to social media astroturfing with the intent of conducting disinformation campaigns. We reviewed known attacks and presented our framework for studying disinformation campaigns. Since then, this field has developed considerably alongside our investigations into novel attack surfaces introduced by manipulating the social dynamic in digital spaces.

In this blog post, I’ll circle back to some of the techniques we observed and how they have developed since then. I’ll also review the mechanisms supporting information operations (IO) attacks and how those mechanisms have been mitigated (or ignored) by their respective platforms. Finally, I will discuss the future work that Margin Research is conducting in this space alongside DARPA, as well as similar work from the academic world that is constructing new frameworks for analyzing social media manipulation in the burgeoning field of social cybersecurity.

Prior campaigns versus new campaigns

In 2019, we found that traditional approaches to countering information operations (IO) rely far too heavily on leveraging disparate Open Source Intelligence (OSINT) techniques to profile the critical components of a campaign (operators, intentions, techniques, etc.). Such methods can be unreliable, and they encourage the fake bot accounts that support these campaigns to optimize toward behavior that will eventually become harder to track. We outlined how observable IO campaigns are discovered and how they fail, and we explored techniques that more sophisticated actors have already begun to use.

It was our position that by analyzing the mechanisms that enable information operations, analysts can begin to get a handle on how to mitigate their impact. Focusing on technical signals enables analysts to manipulate the effectiveness of campaigns and disrupt operations as they occur, rather than after the fact. Simple examples of the techniques that leave such signals include user agent swapping, headless browsers, and scrambling standard header orders. More sophisticated campaigns involve leveraging human actors augmented by bot activity; they may consist of things like hacked home routers operating as residential IPs, phone apps that tell you when and what to post, incentivized traffic, sock puppet accounts impersonating celebrities, or paying 500 soldiers to use devices distinct from their personal ones to amplify content.
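As a rough illustration of what "focusing on technical signals" can look like, the sketch below flags two of the simple techniques mentioned above: a headless browser user agent and a scrambled header order. The `EXPECTED_ORDER` baseline and the function names are hypothetical, chosen only for this example; a real deployment would derive baselines per browser build from observed traffic.

```python
# Hypothetical baseline: the order in which a genuine browser build is
# ASSUMED to send these headers. Real baselines vary by browser and version.
EXPECTED_ORDER = [
    "host", "connection", "user-agent",
    "accept", "accept-encoding", "accept-language",
]


def header_order_matches(observed, expected=EXPECTED_ORDER):
    """True if the headers shared with the baseline keep their relative order."""
    # Lowercase and de-duplicate while preserving first-seen order.
    observed_lower = list(dict.fromkeys(h.lower() for h in observed))
    shared = [h for h in observed_lower if h in expected]
    baseline = [h for h in expected if h in observed_lower]
    return shared == baseline


def technical_signals(user_agent, header_names):
    """Return the names of the simple signals raised by one request."""
    signals = []
    # Headless Chrome advertises itself by default unless the operator swaps the UA.
    if "headlesschrome" in user_agent.lower():
        signals.append("headless_user_agent")
    if not header_order_matches(header_names):
        signals.append("header_order_mismatch")
    return signals
```

Either signal is trivial for an operator to fix once they know it is being watched, which is exactly why the next section argues for holding several independent indicators rather than acting on the first one.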

By having an intimate understanding of the mechanisms that support IO campaigns, you can intelligently implement countermeasures that confuse and disrupt the operations in real time or, at the very least, catch them next time. For example, if you find a way to detect an ongoing campaign dynamically, your intuition would tell you to flip the switch. The attack stops, and the problem goes away. However, advanced and persistent adversaries will not throw in the towel after one round. They will figure out what got them caught, fix it, and then keep going. Instead, we must treat this as a chess game and plan several steps ahead. Maintaining an ongoing list of signatures that independently track a campaign will allow us to flip a more pervasive switch on the adversary. Once we observe the first bug mitigation, we can burn another indicator and keep the pressure on. Alternatively, we might decide to flip their operation off and on again. Their attack goes down for an hour but comes back again, or perhaps it is disabled at inopportune times. If threat intelligence suggests they’re active during particular times each day, we might allow things to work during those hours and then turn it off the rest of the time. The only way to defeat a threat is to impose costs on the adversary. The most effective way to impose costs on the adversary is to take the time to understand the costs associated with their tools.
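The "burn one indicator at a time" idea can be sketched in a few lines. This is a minimal illustration under stated assumptions, not a description of any platform's tooling: `Indicator`, `CampaignTracker`, `enforcement_active`, and the event fields are all hypothetical names invented for the example.

```python
from dataclasses import dataclass, field
from datetime import time
from typing import Callable, Dict, List, Optional


@dataclass
class Indicator:
    name: str
    matches: Callable[[Dict[str, str]], bool]  # True if the event shows this signature


@dataclass
class CampaignTracker:
    indicators: List[Indicator]                       # all signatures we track
    burned: List[str] = field(default_factory=list)   # the ones we enforce on

    def observed(self, event: Dict[str, str]) -> List[str]:
        """Every indicator the event matches, whether burned or not."""
        return [i.name for i in self.indicators if i.matches(event)]

    def should_block(self, event: Dict[str, str]) -> bool:
        """Act only on burned indicators; the rest stay silent."""
        return any(name in self.burned for name in self.observed(event))

    def burn_next(self) -> Optional[str]:
        """Start enforcing the next unburned indicator, if one is left."""
        for ind in self.indicators:
            if ind.name not in self.burned:
                self.burned.append(ind.name)
                return ind.name
        return None


def enforcement_active(now: time, allow_start: time, allow_end: time) -> bool:
    """Enforce outside the adversary's usual hours (window must not cross midnight)."""
    return not (allow_start <= now < allow_end)
```

A short usage example: the operator fixes the first signature, the tracker still sees the campaign through the second, and the defender burns that one next.

```python
tracker = CampaignTracker([
    Indicator("stale_user_agent", lambda e: e.get("ua") == "OldBot/1.0"),
    Indicator("shared_asn", lambda e: e.get("asn") == "AS-EXAMPLE"),
])
tracker.burn_next()                                  # enforce on the user agent only
patched = {"ua": "Mozilla/5.0", "asn": "AS-EXAMPLE"}  # adversary fixed the user agent
tracker.should_block(patched)                        # False: first indicator evaded
tracker.observed(patched)                            # still tracked via "shared_asn"
tracker.burn_next()                                  # keep the pressure on
```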

Work that has been done to inhibit these attacks

In 2018, Mark Zuckerberg famously rebuked Sen. Orrin Hatch’s attempted “gotcha” question: “...how do you sustain a business model in which users don’t pay for your service?” stating simply, “Senator, we run ads.” In 2020, Facebook temporarily paused ads related to social issues, elections, and politics the week before the US election. Twitter, meanwhile, had blocked all political advertising on its platform. There is perhaps no more remarkable testament to a hard problem than seeing major platforms afflicted with the disinfo flu, paralyzing their primary monetization strategy for fear of information operations leveraging it as a disinformation strategy. Are we to expect social media platforms to castrate themselves every time a local election occurs? I have often said that Facebook is a victim of its success in this regard. They could not have anticipated the full scope and impact of disinformation campaigns when they created the platform. They are, earnestly albeit frantically, applying duct tape to the Jenga tower they built their house on top of. Fundamentally, the problem of disinformation is a human one, supported and amplified by social media due primarily to its very nature of spreading and disseminating knowledge. The enabling element is impossible to mitigate short of these platforms disappearing entirely.

What we have observed since 2019 is a rising standard of making information operations not impossible but rather more expensive to conduct. Rather than try to tackle the issues fundamentally enabling disinformation, platforms focus on targeted takedowns of sophisticated actors by banning the affiliated accounts outright, or on anticipating high-risk events, such as the 2020 election in Myanmar, and implementing media literacy programs to try to mitigate the damage. The costs associated here are wildly asymmetric and not in Facebook’s favor. Focusing on big fish requires leaning heavily on AI/ML models to flag known problematic symbols and phrases for smaller communities. But it falls short of finding smaller, high-risk communities developing of their own accord.

You do not need 40,000 people following your “let’s shoot the cops” Facebook page to radicalize someone into committing an act of terror. Social media as an apparatus concerns itself with acquiring new users and forming new communities, and that compulsion is what adversaries exploit. These communities do not need to be large; they need to be focused. A study in 2018 found that a bot-led influence campaign in Ukraine could hijack a small community of men who had been posting similar provocative images of women online. By tagging groups of these men in similar posts, the bots motivated the formation of a community focused specifically on this topic, where the bots were the consolidating element and community leaders. The bots then began to periodically send out messages related to obtaining weapons and becoming involved in the conflict. Communities on the internet are inherently topic-oriented, and this technique can be used to hijack any of them, become the arbiter of content within that community, and then disseminate uniquely violent rhetoric.

Future of the space

Interestingly, there have been prevailing dichotomies in this space. In 2019, Margin Research contextualized IO as either centralized or decentralized operations. Centralized operations hinge on the whims of an influential consolidating figure or account to disseminate information, whereas decentralized campaigns leverage high volumes of distributed, potentially anonymous accounts that present an overwhelming narrative.

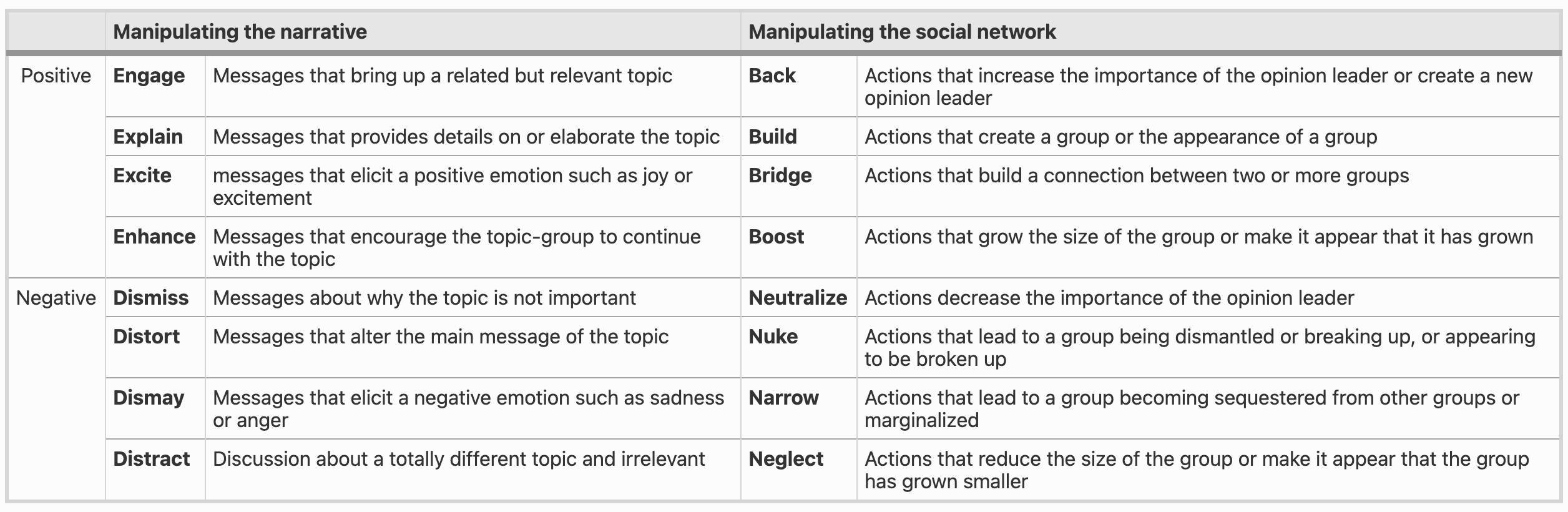
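One crude way to place an observed campaign on the centralized/decentralized spectrum is to ask how much of its amplification traces back to a single source. The sketch below is only an illustration of that idea, not the method from our 2019 talk; the function name and the `(amplifier, original_author)` input shape are assumptions made for the example.

```python
from collections import Counter


def top_source_share(reshares):
    """Fraction of reshares whose original author is the single most-amplified account.

    `reshares` is an iterable of (amplifier, original_author) pairs.
    Values near 1.0 suggest a centralized operation built around one figure;
    values near 1/number_of_authors suggest a decentralized one.
    """
    counts = Counter(author for _, author in reshares)
    total = sum(counts.values())
    if total == 0:
        return 0.0
    return counts.most_common(1)[0][1] / total
```

A single number like this hides a great deal (coordinated accounts can pose as independent sources), so it is better treated as a first triage signal than as a classification.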

The BEND Framework proposed in November last year introduces an alternative dichotomy that suggests conducting IO attacks requires using narrative and structural maneuvers. By leveraging both positive and negative maneuvers in social environments, you may influence a given community in a wide variety of ways. One of the things I like most about the BEND framework is its platform agnosticism. At SummerCon, we were considering threats pertaining to major social media platforms. However, BEND considers the social element that drives IO campaigns and identifies fundamental techniques that support them.

At Margin Research, we have been working closely with DARPA to apply these frameworks, techniques, and threat models to the high-impact space of open source development communities. We have theorized and observed that strategically interfering with the social component prevalent in this space allows adversaries to inject vulnerabilities into major open source projects. These projects range from the Linux kernel to Firefox and, in some cases, smaller third-party libraries. There is no established trust metric by which you can vet the accounts or individuals that submit code on the basis of code artifacts, commit quality, historic community influence, and affiliations. We believe that by analyzing open source communities, social influence over source code changes, and contributor behavior both as a developer and as a community member, we can defend these targets and ensure the integrity of open source ecosystems and supply chains.

I hope to share more insights regarding this novel attack surface as we discover them. I also plan to expound on some of these subjects in greater detail over the next few months.